Are you a programmer? Want to do something for the environment and even make the world a better place? Then start optimizing your code!


It seems like today the solution to most software performance issues is to throw more hardware at the problem instead of making the software run faster on existing hardware. Doing more with less is a forgotten mantra, and Wirth’s Law continues to ring true:

Software is getting slower more rapidly than hardware becomes faster.

Jeff Atwood has an article on his Coding Horror blog about the value of code optimization versus just buying more hardware. His argument is that since hardware is cheap compared to programmer salaries, the first step to make software run faster should always be to buy more hardware. We recommend you read the whole thing.

He rounds off the article with this recommended approach:

1. Throw cheap, faster hardware at the performance problem.

2. If the application now meets your performance goals, stop.

3. Benchmark your code to identify specifically where the performance problems are.

4. Analyze and optimize the areas that you identified in the previous step.

5. If the application now meets your performance goals, stop.

6. Go to step 1.

This makes perfect sense if you actually go through all the steps and really do optimize after the initial short-term fix of buying more hardware.

HOWEVER, it is all too common that companies don’t take code optimization seriously enough and never go beyond step 3 above. The solution will more or less always be to throw more hardware at the problem.

In addition to this, a lot of programmers simply assume that it’s OK to demand more powerful hardware for their software to run well, and don’t put much effort into doing more with the same resources.

These two things combined give us an environment where increasing amounts of increasingly powerful hardware are being used as a crutch to compensate for the poor performance of our software.
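To make the benchmarking step in the approach above a little more concrete, here is a minimal sketch in Python using the standard library's `cProfile` and `pstats` modules. The functions `load_values`, `count_zero_sum_pairs`, `handle_request` and `profile_call` are hypothetical stand-ins invented for this illustration; they are not from Atwood's article or any real application.

```python
import cProfile
import io
import pstats


def load_values(n):
    # Cheap step (hypothetical): builds the input list -n .. n-1.
    return list(range(-n, n))


def count_zero_sum_pairs(values):
    # Deliberately quadratic: compares every value with every other value.
    count = 0
    for a in values:
        for b in values:
            if a + b == 0:
                count += 1
    return count


def handle_request(n=200):
    # Hypothetical "request" made up of one cheap and one expensive step.
    return count_zero_sum_pairs(load_values(n))


def profile_call(func, *args):
    """Run func under cProfile and return its result plus a text report."""
    profiler = cProfile.Profile()
    profiler.enable()
    result = func(*args)
    profiler.disable()
    report = io.StringIO()
    stats = pstats.Stats(profiler, stream=report)
    # Show the five entries with the highest cumulative time.
    stats.sort_stats("cumulative").print_stats(5)
    return result, report.getvalue()


if __name__ == "__main__":
    result, report = profile_call(handle_request)
    print(report)
```

In a report like this, the quadratic function should account for nearly all of the time spent, which tells you where optimization effort is likely to pay off before anyone orders another server.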

Four BIG problems

Here is why today’s tendency to simply throw more hardware at software performance problems is unhealthy and shortsighted:

- You do not leverage anywhere close to the full potential of your hardware.

- You end up with more hardware, which consumes more electricity, which will cost more in the long run (especially if you run a large-scale operation).

- Using more electricity is not just a cost issue, it’s bad for the environment.

- More hardware means more components that have to be manufactured and eventually disposed of, which in turn is bad for the environment.

Saving costs is often used as an argument for not putting in the time to make code run faster, but the purchase price is only part of what more hardware costs: increased power consumption, sys-admin resources and future scaling issues should also be taken into account.

The implications of more efficient code

What if we could double overall code efficiency? (Don’t say it isn’t possible.) That would mean a huge reduction in the amount of hardware that companies would need to run their operations, especially on the server side (since office workers would still need a computer each).

Imagine your web servers being able to handle twice as many requests as they do today. Imagine modern top-of-the-line software running fine on modest, even old, hardware. It’s a nice thought, isn’t it?
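The arithmetic behind that thought is simple enough to sketch. The numbers below (a peak load of 10,000 requests per second and 500 requests per second per server) are purely hypothetical, chosen only to illustrate the calculation, and `servers_needed` is a helper made up for this example.

```python
import math


def servers_needed(peak_requests_per_second, requests_per_server):
    # Round up: a fraction of a server still means one more machine.
    return math.ceil(peak_requests_per_second / requests_per_server)


# Hypothetical figures, for illustration only.
PEAK_LOAD = 10_000        # requests per second at peak
PER_SERVER_TODAY = 500    # requests per second one server handles today

before = servers_needed(PEAK_LOAD, PER_SERVER_TODAY)
after = servers_needed(PEAK_LOAD, 2 * PER_SERVER_TODAY)
print(before, after)  # prints "20 10"
```

Under these assumptions, doubling per-server throughput halves the number of machines, and with them the power and cooling they would need.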

Hardware manufacturers might not be all too happy with that development, though… But here is why we shouldn’t care about that:

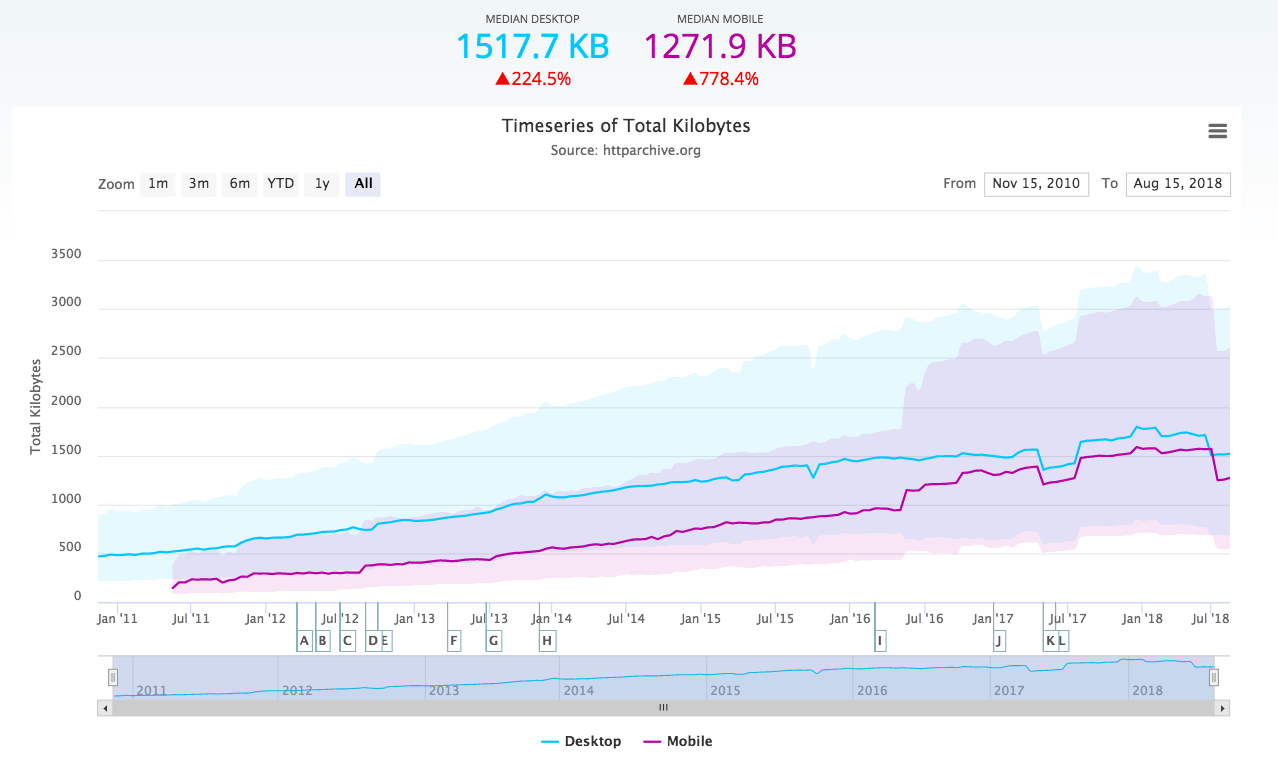

In 2005, servers consumed as much power in the United States as televisions, and that was four years ago! We don’t know what the full carbon footprint of the world’s servers (and other hardware, it’s all running software!) actually is today, but it is bound to be significant and growing.

Why needing less powerful hardware is a Good Thing

There is another very important benefit that would come from a more efficient code base: the faster our code is overall, the less powerful the hardware needed to run common applications, which in turn would make it possible to create more affordable computers. This would be a huge benefit to third-world and developing countries, not to mention less fortunate people in the industrialized nations.

Please get that old-school mentality back

Huge gains in performance can be made from effective, competent optimizations of algorithms and code. Even when you think your code is fast, it can usually be made to perform several times faster with the right approach.

To give you some examples, just look at the impressive performance gains between first-generation games for game consoles and those released a couple of years into the life-cycle of that same console, or the things coders were able to make C64 and Amiga computers do back in the day. These are examples where software performance was improved by leaps and bounds without resorting to hardware upgrades.

The Coding Horror article we mentioned above has a quote from Patrick Smacchia (from CodeBetter.com), where he observes how Amiga programmers were able to increase software performance by an incredible 50 times in the time frame of just a few years by continuously challenging themselves on the same hardware.
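On a much smaller scale, the kind of algorithmic optimization described above might look like the following Python sketch. Both functions are hypothetical examples written for this illustration: they answer the same question (does a list contain a duplicate?), but the first compares every pair of items while the second remembers what it has already seen in a set. The set-based version assumes the items are hashable.

```python
import timeit


def has_duplicates_quadratic(items):
    # Compares every pair of items: roughly n * n / 2 comparisons.
    for i in range(len(items)):
        for j in range(i + 1, len(items)):
            if items[i] == items[j]:
                return True
    return False


def has_duplicates_linear(items):
    # Remembers seen items in a set: one pass over the list.
    # Assumes the items are hashable.
    seen = set()
    for item in items:
        if item in seen:
            return True
        seen.add(item)
    return False


if __name__ == "__main__":
    data = list(range(1000))  # worst case for both: no duplicates at all
    for func in (has_duplicates_quadratic, has_duplicates_linear):
        seconds = timeit.timeit(lambda: func(data), number=3)
        print(f"{func.__name__}: {seconds:.4f} s")
```

The exact timings depend on the machine, but the gap between the two should widen quickly as the list grows, with no change in hardware.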

Do more with less

If doing more with less were a more valued mindset in software development, we would all reap substantial long-term benefits. We would need less (and less powerful) hardware, we would save money, we would save power, and in doing so, we would help save the environment.

Think about that.